imkoy

2. Engineering and Data Science (WORK IN PROGRESS)

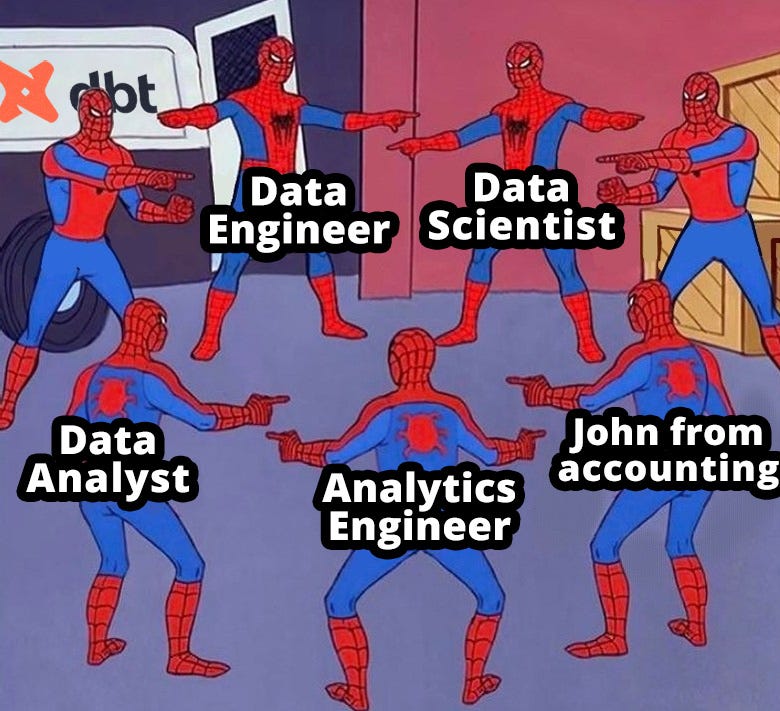

Initial note: If you are interested in learning data science, I would recommend checking textbooks used in university courses, such as "R for Data Science" by Hadley Wickham and Garrett Grolemund, which is freely available online. This blog is my take on the subject. Data Science is a broad topic, and the image above summarizes it well.

All engineers engage with data as a fundamental aspect of their work. This matters because analyzing large amounts of data requires a solid understanding of statistics and math. Yet working with raw data brings uncertainty, as we may not fully grasp our current situation or where we are headed.

During my MSc, I frequently worked with datasets containing thousands of data points. Initially, I used Excel for this task, as we are all familiar with it. Excel is capable, powerful, and versatile, which makes it a key tool for making sense of data. However, there are many other software options that offer a range of statistical and graphical techniques for analysis and visualization. As I worked with heavier case-study datasets, I discovered that Excel was not well suited to computationally demanding tasks. Seeking something faster, I turned to alternatives that offer tools for mathematical modeling, simulation, algorithm development, and data visualization, and that handle large datasets more robustly. Some options require a paid license, although fortunately some universities provide students with a free one.

An example of a trending alternative is Jupyter Notebook. Compared to other alternatives, Jupyter Notebook is a great stepping stone, offering a dynamic space with numerous theoretical and practical exercises that teach data science concepts. Jupyter Notebook can be confusing at first, particularly with extensive code, and you might prefer a smoother, more streamlined experience. For this reason, learning the keyboard shortcuts is a good idea, as they make navigation easier. You can display all shortcuts by entering command mode and pressing 'H'. Some of the most important are 'b', which adds a cell below, and 'a', which adds one above. You can also show the documentation (the Docstring) for objects typed in a code cell by pressing 'Shift + TAB'. Comments can be added by pressing 'Ctrl + /' or by typing '#' before the code. The general idea is similar to other software, but the great thing about Jupyter Notebook is how easy it is to edit and modify specific portions of code and to share your analysis, which is probably its biggest advantage.
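To make the Docstring mentioned above a bit more concrete, here is a minimal sketch. The function name and the numbers are made up for illustration. The Docstring is the string literal at the top of a function, and it is the text a notebook displays when you ask for an object's documentation.

```python
def mean(values):
    """Return the arithmetic mean of a non-empty sequence of numbers."""
    return sum(values) / len(values)


# In a notebook, placing the cursor inside "mean(" and pressing Shift + TAB
# shows the text above. Outside a notebook, the same text is available
# through the __doc__ attribute or the built-in help() function.
print(mean.__doc__)
print(mean([2, 4, 6]))
```

Writing a one-line Docstring for your own helper functions means the same shortcut works for your code, not only for library functions.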

2.X. Learning Python project

Generally, when starting a new project in Python, you should create a new environment with Conda using the "conda create" command, to avoid conflicts with future projects.

Alternatively, this can be done from the Anaconda Navigator app.

You can tell which environment you are in by looking at the name shown at the start of your terminal prompt, and you can switch environments with the "conda activate" command followed by the environment name.
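The active environment can also be checked from inside Python, which is handy in a notebook where no terminal prompt is visible. The sketch below is one possible way to do it, assuming Conda has set the CONDA_DEFAULT_ENV variable on activation. The helper name describe_environment is made up for this example.

```python
import os
import sys


def describe_environment():
    """Return the active Conda environment name (if any) and the interpreter prefix."""
    # CONDA_DEFAULT_ENV is set when an environment is activated;
    # it is absent when Python runs outside of Conda.
    env_name = os.environ.get("CONDA_DEFAULT_ENV")
    # sys.prefix is the directory of the environment that owns the running interpreter.
    return {"conda_env": env_name, "prefix": sys.prefix}


info = describe_environment()
print(f"Conda environment: {info['conda_env'] or 'none detected'}")
print(f"Interpreter prefix: {info['prefix']}")
```

If the printed prefix does not point to the environment you expected, the notebook or script is probably running with a different interpreter than the one you activated.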

Some repositories require users to install project requirements. These are generally listed in a requirements.txt file that comes with the cloned repository and can be installed with a pip command in your terminal, for example "pip install -r requirements.txt".
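The same installation step can be scripted from Python. The following is only a sketch: the two helper names are hypothetical, and it assumes the requirements file sits in the current working directory of the cloned repository. It calls pip through the running interpreter so that packages land in the active environment.

```python
import subprocess
import sys
from pathlib import Path


def build_pip_command(requirements_file="requirements.txt"):
    """Build the pip command that installs everything listed in the file."""
    # "python -m pip" uses the pip that belongs to the running interpreter,
    # so the packages are installed into the currently active environment.
    return [sys.executable, "-m", "pip", "install", "-r", str(requirements_file)]


def install_requirements(requirements_file="requirements.txt"):
    """Run pip for the given file; raises CalledProcessError if pip fails."""
    path = Path(requirements_file)
    if not path.is_file():
        raise FileNotFoundError(f"{path} not found; run this from the cloned repository")
    subprocess.run(build_pip_command(path), check=True)


# Show the command without running it.
print(" ".join(build_pip_command()))
```

Calling install_requirements() from the repository folder would then perform the actual installation.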

I was interested in deep-learning algorithms. The deep-learning project I tested exposed a number of command-line arguments through which it could be called. They were defined in a .py file named detect.

Following the arguments defined there, we were able to run the model by executing a command that tells the tool to run on my webcam.
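For readers who have not seen how a script exposes command-line arguments, here is a minimal sketch using Python's standard argparse module. It is not the project's actual detect file: the option names --source and --conf-threshold, their defaults, and the convention that a numeric source means a camera index are all assumptions made for illustration.

```python
import argparse


def build_parser():
    """Hypothetical argument parser; the real detect script defines its own options."""
    parser = argparse.ArgumentParser(description="Run a detection model (illustrative sketch)")
    # Assumed option: where the input comes from. "0" stands for the default webcam here.
    parser.add_argument("--source", default="0",
                        help="input file path, or a camera index such as 0")
    # Assumed option: minimum confidence required to keep a detection.
    parser.add_argument("--conf-threshold", type=float, default=0.25,
                        help="confidence threshold")
    return parser


# Passing a list to parse_args mimics typing the same words on the command line.
args = build_parser().parse_args(["--source", "0", "--conf-threshold", "0.5"])
uses_webcam = args.source.isdigit()  # a purely numeric source is treated as a camera index
print(args.source, args.conf_threshold, uses_webcam)
```

A script written this way also gets a --help option for free, which is often the quickest way to discover what arguments a repository supports.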

The algorithm I was testing has multiple modes listed under its repository that can be called to achieve different processing times and results.

These can be called from the command line in the same way as the first instance of the algorithm.

In another project, I experimented with the dplyr library to manipulate mammal species data points, including filtering columns and rows, adjusting formatting, and merging different data sources. dplyr was fun and useful, and its vast documentation makes it "easy" to navigate. I have exported a simplified GIS web-map for reference.

2.X. Conclusion

Data Science is a deep topic that you never stop learning. It is constantly evolving, and a large number of packages and functions are constantly being released. The real challenge may be knowing where to look, finding the time to work on a project, and having quality data.

Post date: 2024-03-02

Last edit: 2024-08-10